In my previous blog post about LocalStack S3 setup, I included a hardcoded delay of 5 seconds before actually calling the AWS SDK. This gives LocalStack enough time to start and be ready to accept incoming requests. However, how much is actually enough?

Update

LocalStack now supports waiting functionality out of the box. See my example of SnsTestReceiver usage. This blog post is no longer relevant :)

TL;DR;

The source code of my console app which waits for LocalStack S3 to start is on my GitHub.

Waiting for LocalStack S3

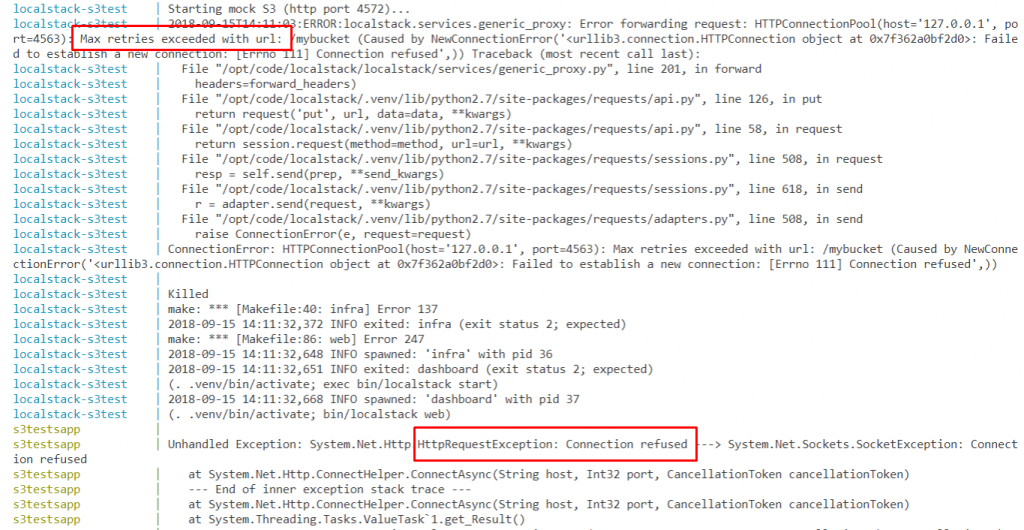

If you need to run resource-hungry mocked services in a dockerized environment on a busy build server, 5 seconds is sometimes not enough. You catch LocalStack off guard, and instead of receiving a "still busy, try again" kind of warning, you get an exception, and in some cases the container simply dies…

To simulate such a scenario, I took my previous app and added CPU and memory limits to the docker-compose.yml file.

    localstack-s3test:
      deploy:
        resources:
          limits:
            cpus: '0.25'
            memory: 250M

Starting with version 3 of the Docker Compose file format, resource limits are only applied when running in swarm mode. To work around this, pass the --compatibility flag.

    docker-compose --compatibility up --build

The outcome is illustrated in the following screenshots.

With a full CPU and 150 MB of memory, everything ran smoothly with a mere 5 seconds for LocalStack to get ready. On the actual build server, however, you might end up waiting as long as 60-90 seconds just to be safe…

Solution

Just as an interesting exercise, and by no means production-ready code, I looked for an alternative way to know that LocalStack is ready to accept S3 requests. In an ideal world the mock service should not die, but it was interesting to research a way around this limitation.

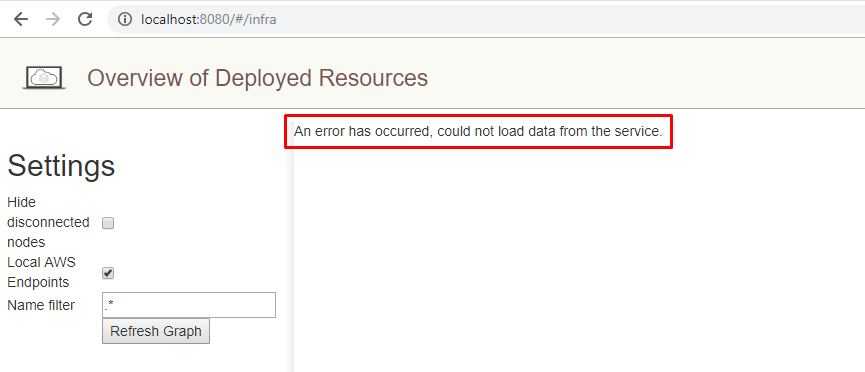

I noticed that hitting the Refresh button in the LocalStack Dashboard calls the /graph endpoint to find out whether, and how many, nodes (mock services) are available, i.e. healthy and ready to accept incoming requests. Bullseye!

And when S3 is ready, we get an OK status code. We can even specify a bucket name to make sure a specific service is up and running. So the idea is to send an HTTP request to the /graph endpoint and retry until a success response is received or our internal timeout is reached.

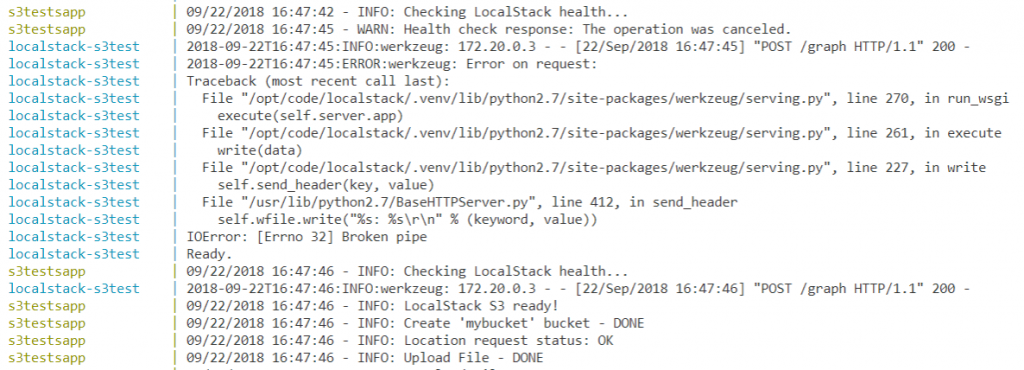

After waiting for over a minute and observing several "max retry reached" exceptions, s3testapp finally received an OK response and ran all tests successfully. The S3 service was still running, and the Dashboard displayed it as well. Looks much better, right?

Code

The idea is as follows:

- Create a CancellationToken to set an internal timeout, so we don’t end up waiting forever for LocalStack to start :)

- While cancellation has not been requested on the token, send an HTTP POST request to the LocalStack /graph endpoint with a 3-second timeout.

- When the HTTP response is a success, call the S3 SDK to try to access and/or create the S3 bucket.

- If the S3 SDK call was successful, report success and break out of the while loop.

- If anything failed, i.e. the response was not successful, an exception was thrown, or the 3-second timeout was reached, report the error, wait 1 second and go back to the second step.

The actual implementation is below.

    using System;
    using System.Net.Http;
    using System.Text;
    using System.Threading;
    using System.Threading.Tasks;
    using Amazon.S3;
    using Newtonsoft.Json;

    public class LocalstackSetup
    {
        private readonly LocalstackSettings _settings;
        private readonly IAmazonS3 _s3Client;

        public LocalstackSetup(LocalstackSettings settings, IS3ClientFactory s3ClientFactory)
        {
            _settings = settings ?? throw new ArgumentNullException(nameof(settings));
            if (s3ClientFactory == null)
                throw new ArgumentNullException(nameof(s3ClientFactory));
            _s3Client = s3ClientFactory.CreateClient();
        }

        public async Task WaitForInit(TimeSpan timeout)
        {
            // Overall deadline, so we never wait forever for LocalStack.
            using (var cts = new CancellationTokenSource(timeout))
            // One client for all attempts; each request gives up after 3 seconds.
            using (var httpClient = new HttpClient { Timeout = TimeSpan.FromSeconds(3) })
            {
                var request = new GraphRequest
                {
                    AwsEnvironment = "dev"
                };

                while (!cts.IsCancellationRequested)
                {
                    try
                    {
                        LogHelper.Log(LogLevel.INFO, "Checking LocalStack health...");
                        var content = new StringContent(JsonConvert.SerializeObject(request), Encoding.UTF8, "application/json");
                        var response = await httpClient.PostAsync(_settings.DashboardGraphUrl, content, cts.Token);
                        if (response.IsSuccessStatusCode)
                        {
                            // The dashboard reports healthy; confirm S3 itself answers.
                            await _s3Client.EnsureBucketExistsAsync(_settings.Bucket);
                            LogHelper.Log(LogLevel.INFO, "LocalStack S3 ready!");
                            break;
                        }
                        LogHelper.Log(LogLevel.WARN, $"Health check response: {response.StatusCode}");
                    }
                    catch (Exception ex)
                    {
                        LogHelper.Log(LogLevel.WARN, $"Health check response: {ex.Message}");
                    }

                    // Wait 1 second before retrying, whether the attempt threw or
                    // returned a non-success status. Throws TaskCanceledException
                    // once the overall timeout has been reached.
                    await Task.Delay(1000, cts.Token);
                }
            }
        }
    }

And the actual call to the wait method is below.

    // ...
    var localstackSetup = container.GetInstance<LocalstackSetup>();
    await localstackSetup.WaitForInit(TimeSpan.FromSeconds(90));

Downsides

While this solution works, it has several drawbacks.

- Application code must have knowledge about the infrastructure.

- The implementation is a bit heavy and requires several classes for a simple health check.

- The LocalStack Dashboard URL has to be in your config.
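One way to soften the first two drawbacks might be to separate the retry loop from the LocalStack-specific check. The following is only a minimal sketch under that assumption, not the code from my repository: `Readiness`, `WaitUntilReadyAsync` and the `probe` delegate are hypothetical names introduced for illustration, and only the .NET base class library is used.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: polls a caller-supplied probe until it reports success
// or the overall timeout elapses. It knows nothing about LocalStack or S3.
public static class Readiness
{
    // Returns true if the probe succeeded before the timeout, false otherwise.
    public static async Task<bool> WaitUntilReadyAsync(
        Func<CancellationToken, Task<bool>> probe,
        TimeSpan timeout,
        TimeSpan retryDelay)
    {
        using (var cts = new CancellationTokenSource(timeout))
        {
            while (!cts.IsCancellationRequested)
            {
                try
                {
                    if (await probe(cts.Token))
                        return true;
                }
                catch (Exception)
                {
                    // Any probe failure (HTTP error, SDK exception,
                    // per-call timeout) is treated as "not ready yet".
                }

                try
                {
                    // Pause between attempts; cancelled when the deadline hits.
                    await Task.Delay(retryDelay, cts.Token);
                }
                catch (OperationCanceledException)
                {
                    return false;
                }
            }

            return false;
        }
    }
}
```

The probe passed in could wrap the POST to the /graph endpoint and the EnsureBucketExistsAsync call from the implementation above, so the infrastructure knowledge stays in one small delegate while the loop itself can be reused and tested without a running container.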

Notes

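Make sure the container has enough memory overall, or the whole container might crash with error 137 (128 + 9, meaning the process was killed with SIGKILL, which is typically what happens when a container runs out of memory).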

    localstack-s3test | Killed
    localstack-s3test | make: *** [Makefile:40: infra] Error 137

Conclusion

I would probably stick with the hardcoded delay for the sake of simplicity, unless the load and resources on the build server vary enough to justify this implementation. Nevertheless, it was an interesting technical exercise :)

Update 27/01/2019

Upgraded source code to the latest .NET Core 2.1.

Update 03/10/2020

Use the built-in start and wait functionality.